UE 903 NLG

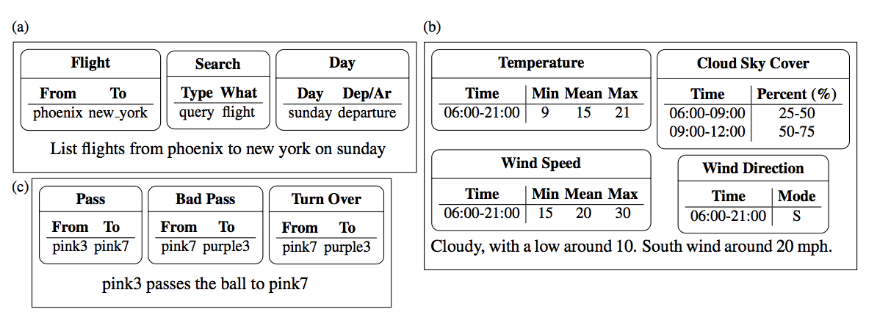

class: center, middle background-image:url(images/data-background-light.jpg) # UE903 EC1: Application to Text ## Natural Language Generation (NLG) ## Lecture 1: Pre-Neural NLG ### Claire Gardent <br><br> <img src="images/lecture1/logos-synalp-transparent.png" width="60%"/> <br><br><br> .footnote[.bold[[Claire Gardent](mailto:claire.gardent@loria.fr) CNRS / LORIA]] --- .left-column[ ## Pre-Neural NLG ] .right-column[ ### Generating from Data * Some Example Data-to-Text Systems * The D2T Pipeline ### Generating from Meaning Representations * Grammar-Based Approaches * Statistical Models ### Generating from Text * Split, Delete, Reorder, Rewrite * Summarisation, Compression, Paraphrasing ] --- class: center, middle, large ## Data-to-Text Generation --- .left-column[ ## Pre-Neural D2T Generation ### Applications ] .right-column[ ## Example System #1: FoG <table> <tr> <td>Function</td><td>Produces textual weather reports in English and French<td></td></tr> <tr><td>Input</td><td>Graphical/numerical weather depiction </td></tr> <tr> <td>User</td><td>Environment Canada (Canadian Weather Service) </td></tr> <tr> <td>Developer</td><td>CoGenTex </td></tr> <tr> <td>Status</td><td>Fielded, in operational use since 1992 </td></tr> </table> .pull-left[ <img src="images/lecture2/foginput.png" width="80%"/> ] .pull-right[ <img src="images/lecture2/fogoutput.png" width="100%"/> ] ] --- .left-column[ ## Pre-Neural D2T Generation ### Applications ] .right-column[ ## Example System #2: PlanDoc <table> <tr><td>Function</td><td>Produces a report describing the simulation options that an engineer has explored <td></td></tr> <tr><td>Input</td><td>A simulation log file <td></td></tr> <tr><td>User</td><td>Southwestern Bell <td></td></tr> <tr><td>Developer</td><td>Bellcore and Columbia University <td></td></tr> <tr><td>Status</td><td>Fielded, in operational use since 1996 <td></td></tr> </table> <br> .pull-left[ RUNID FIBERALL FIBER 6/19/93 ACT YES <br>FA .blue[1301] 2 1995 FA .blue[1201] 2 1995 <br>FA .blue[1401] 2 1995 FA .blue[1501] 2 1995 <br>ANF co 1103 2 1995 48 <br>ANF 1201 1301 2 1995 24 <br>ANF 1401 1501 2 1995 24 <br>END. .blue[856.0 670.2] ] .pull-right[ This saved fiber refinement includes all DLC changes in Run-ID ALLDLC. RUN-ID FIBERALL demanded that PLAN activate fiber for CSAs .blue[1201, 1301, 1401 and 1501] in 1995 Q2. It requested the placement of a 48-fiber cable from the CO to section 1103 and the placement of 24-fiber cables from section 1201 to section 1301 and from section 1401 to section 1501 in the second quarter of 1995. For this refinement, the resulting 20 year route PWE was .blue[$856.00K], a $64.11K savings over the BASE plan and the resulting 5 year IFC was .blue[$670.20K], a $60.55K savings over the BASE plan. ] ] --- .left-column[ ## Pre-Neural D2T Generation ### Applications ] .right-column[ ## Example System #3: TEMSIS <table> <tr><td>Function</td><td>Summarises pollutant information for environmental officials <td></td></tr> <tr><td>Input</td><td>Environmental data + a specific query <td></td></tr> <tr><td>User</td><td>Regional environmental agencies in France and Germany <td></td></tr> <tr><td>Developer</td><td>DFKI GmbH <td></td></tr> <tr><td>Status</td><td>Prototype developed; requirements for fielded system being analysed <td></td></tr> </table> <pre><code data-trim data-noescape> ((LANGUAGE FRENCH) (GRENZWERTLAND GERMANY) (BESTAETIGE-MS T) (BESTAETIGE-SS T) (MESSSTATION "Voelklingen City") (DB-ID "#2083") (SCHADSTOFF "#19") (ART MAXIMUM) (ZEIT ((JAHR 1998) (MONAT 7) (TAG 21)))) </code></pre> Le 21/7/1998 à la station de mesure de Völklingen -City, la valeur moyenne maximale d'une demi-heure (Halbstundenmittelwert) pour l'ozone atteignait 104.0 µg/m³. Par conséquent, selon le decret MIK (MIK-Verordnung), la valeur limite autorisée de 120 µg/m³ n'a pas été dépassée. ] --- .left-column[ ## Pre-Neural D2T Generation ### Applications ] .right-column[ ## Types of D2T Systems <br> | GOAL | APPLICATIONS | |:---- | -----:| | Automated document production | weather forecasts, simulation reports, letters, ... | | Presentation of information to people in an understandable fashion | medical records, expert system reasoning, numerical data, ... | | Teaching | Grammar exercises | | Entertainment | Jokes, stories, poetry | ] --- class: center, middle .left-column[ ## Preneural D2T Generation ### Applications ### The D2T Pipeline ] .right-column[ ## The D2T Pipeline <img src="images/lecture2/pipeline.png" width="80%"/> ] --- .left-column[ ## Preneural D2T Generation ### Applications ### The D2T Pipeline #### Content Selection ] .right-column[ ## Content Selection: determining what to say .top[ <img src="images/lecture2/angeli-input.png" width="100%"/> ] .bottom[ * Verbalising all the input data would yield a very redundant, unnatural text. * Content selection selects and structures the input data so as to support the generation of a natural sounding text * Content selection is domain specific ] ] --- .left-column[ ## Preneural D2T Generation ### Applications ### The D2T Pipeline #### Content Selection ] .right-column[ ## Content Selection .top[ #### Input Data <img src="images/lecture2/angeli-cs.png" width="60%"/> ] <br> <br> .bottom[ #### Output Text *A 20 percent chance of showers after midnight. Increasing clouds, with a low around 48. Southwest wind between 5 and 10 mph.* ] ] --- .left-column[ ## Preneural D2T Generation ### Applications ### The D2T Pipeline #### Content Selection ] .right-column[ ## Content Selection Selecting content for sportscasting, weather forecast and flight booking.  ] --- <h3 id="session-3-2409-2-5pm">Session 3: 24/09. Statistical Content Selection</h3> <p style="color:#3372FF" ;> ABROUGUI Rim </p> Gabor Angeli, Percy Liang, and Dan Klein. A simple domain-independent probabilistic approach to generation. In <em>Proceedings of the 2010 Conference on Empirical Methods in Natural Language Processing</em>, pages 502--512, Cambridge, MA, October 2010. Association for Computational Linguistics. [<a href="https://www.aclweb.org/anthology/D10-1049">http</a>] <blockquote> <font size="-1"> We present a simple, robust generation system which performs content selection and surface realization in a unified, domain-independent framework. In our approach, we break up the end-to-end generation process into a sequence of local decisions, arranged hierarchically and each trained discriminatively. We deployed our system in three different domains—Robocup sportscasting, technical weather forecasts, and common weather forecasts, obtaining results comparable to state-ofthe-art domain-specific systems both in terms of BLEU scores and human evaluation. </font> </blockquote> <p style="color:#3372FF" ;> AFARA Maria </p> Ioannis Konstas and Mirella Lapata. Concept-to-text generation via discriminative reranking. In <em>Proceedings of the 50th Annual Meeting of the Association for Computational Linguistics</em>, pages 369--378, Jeju Island, Korea, July 2012. Association for Computational Linguistics. [<a href="https://www.aclweb.org/anthology/P12-1039">http</a>] <blockquote> <font size="-1"> This paper proposes a data-driven method for concept-to-text generation, the task of automatically producing textual output from non-linguistic input. A key insight in our approach is to reduce the tasks of content selection (“what to say”) and surface realization (“how to say”) into a common parsing problem. We define a probabilistic context-free grammar that describes the structure of the input (a corpus of database records and text describing some of them) and represent it compactly as a weighted hypergraph. The hypergraph structure encodes exponentially many derivations, which we rerank discriminatively using local and global features. We propose a novel decoding algorithm for finding the best scoring derivation and generating in this setting. Experimental evaluation on the ATIS domain shows that our model outperforms a competitive discriminative system both using BLEU and in a judgment elicitation study. </font> </blockquote> ] --- .left-column[ ## Preneural D2T Generation ### Applications ### The D2T Pipeline #### Content Selection ] .right-column[ ## Pre-Neural, Symbolic Content Selection Creates a set of .blue[messages] from input data and other domain/background information * Specific to the application domain * Filter, summarize and process the input data * Can be affected by a user model, history * Can incorporate reasoning and planning algorithms ] --- .left-column[ ## Preneural D2T Generation ### Applications ### The D2T Pipeline #### Content Selection ] .right-column[ ## Extracting Messages from Rail Timetables Current time: Monday 09:40 |Mon-Fri|t01 | t02 | t03 | t04| t05| Caledonian Express | |:---- | -- | -- | -- | -- | -- | -----:| |Aberdeen | 0533 | 0633 | 0737 | 0842 | 0937 | 1000 | |Dundee | 0651 | 0750 | 0853 | 0952 | 1052 | 1149 | |Perth | 0714 | 0812 | 0915 | 1014 | 1114 | 1211| |Glasgow | 0834 | 0915 | 1014 | 1114 | 1215 | 1314 | ] --- .left-column[ ## Preneural D2T Generation ### Applications ### The D2T Pipeline #### Content Selection ] .right-column[ ## Message (Selecting) Current time: Monday 09:40 |Mon-Fri|t01 | t02 | t03 | t04| t05| Caledonian Express | |:---- | -- | -- | -- | -- | -- | -----:| |.blue[Aberdeen] | 0533 | 0633 | 0737 | 0842 | 0937 | .blue[1000] | |Dundee | 0651 | 0750 | 0853 | 0952 | 1052 | 1149 | |Perth | 0714 | 0812 | 0915 | 1014 | 1114 | 1211| |Glasgow | 0834 | 0915 | 1014 | 1114 | 1215 | 1314 | <br> <pre><code data-trim data-noescape> Message-id:msg02 Relation:DEPARTURE departing-entity:CALEDON-EXP departure-location:ABERDEEN departure-time:1000 </code></pre> ] --- .left-column[ ## Preneural D2T Generation ### Applications ### The D2T Pipeline #### Content Selection ] .right-column[ ## Message (User Adaptation) .blue[Current time: Monday 09:40 ] |Mon-Fri|t01 | t02 | t03 | t04| t05| .blue[Caledonian Express] | |:---- | -- | -- | -- | -- | -- | -----:| |Aberdeen | 0533 | 0633 | 0737 | 0842 | 0937 | 1000 | |Dundee | 0651 | 0750 | 0853 | 0952 | 1052 | 1149 | |Perth | 0714 | 0812 | 0915 | 1014 | 1114 | 1211| |Glasgow | 0834 | 0915 | 1014 | 1114 | 1215 | 1314 | #### Next train <pre><code data-trim data-noescape> Message-id:msg01 Relation:IDENTITY arg1:NEXT-TRAIN arg2:CALEDON-EXP </code></pre> ] --- .left-column[ ## Preneural D2T Generation ### Applications ### The D2T Pipeline #### Content Selection ] .right-column[ ## Messages (Reasoning) Current time: Monday 09:40 |Mon-Fri|t01 | t02 | t03 | t04| t05| Caledonian Express | |:---- | -- | -- | -- | -- | -- | -----:| |Aberdeen | 0533 | 0633 | 0737 | 0842 | 0937 | 1000 | |Dundee | 0651 | 0750 | 0853 | 0952 | 1052 | 1149 | |Perth | 0714 | 0812 | 0915 | 1014 | 1114 | 1211| |Glasgow | 0834 | 0915 | 1014 | 1114 | 1215 | 1314 | #### Summarising the data <pre><code data-trim data-noescape> Message-id:msg03 Relation:NUMBER-OF-TRAINS-IN-PERIOD traject:ABERDEEN/GLASGOW period:DAILY number:6 </code></pre> ] --- .left-column[ ## Preneural D2T Generation ### Applications ### The D2T Pipeline #### Document Planning ] .right-column[ ## Document Planning ### Goal * To determine how to structure the selected information in order to generate a coherent text ### Two Common (Symbolic) Approaches * Methods based on observations about common text structures (.blue[Schemas]) * Methods based on reasoning about discourse coherence and the purpose of the text (.blue[Discourse Structure]) ] --- .left-column[ ## Preneural D2T Generation ### Applications ### The D2T Pipeline #### Document Planning ] .right-column[ ### Document Planning using Schemas (McKeown 1985) * texts often follow conventionalised patterns * these patterns specify how a particular document plan can be constructed using smaller schemas or atomic messages ### Implementing schemas * simple schemas can be expressed as .bblue[grammars] * more flexible schemas usually implemented as macros or class libraries on top of a conventional programming language, where each schema is a .bblue[procedure] ] --- .left-column[ ## Preneural D2T Generation ### Applications ### The D2T Pipeline ##### Document Planning ] .right-column[ #### A Simple Schema <pre><code data-trim data-noescape> WeatherSummary -> MonthlyTempMsg MonthlyRainfallMsg RainyDaysMsg RainSoFarMsg </code></pre> #### A More Complex set of Schemata * Recursive * Includes optional elements (in square brackets) <pre><code data-trim data-noescape> WeatherSummary -> TemperatureInformation RainfallInformation TemperatureInformation -> MonthlyTempMsg [ExtremeTempInfo] [TempSpellsInfo] RainfallInformation -> MonthlyRainfallMsg [RainyDaysInfo] [RainSpellsInfo] RainyDaysInfo -> RainyDaysMsg [RainSoFarMsg] </code></pre> ] --- .left-column[ ## Preneural D2T Generation ### Applications ### The D2T Pipeline #### Document Planning ] .right-column[ ### Schemas: Pros and Cons #### Advantages of schemas * Computationally efficient * Allow arbitrary computation when necessary * Naturally support genre conventions * Relatively easy to acquire from a corpus #### Disadvantages * Limited flexibility: require predetermination of possible structures * Limited portability: likely to be domain-specific ] --- .left-column[ ## Preneural D2T Generation ### The D2T Pipeline #### Document Planning ] .right-column[ ### Text Planning using Discourse Theory Texts are coherent by virtue of .blue[relationships that hold between their parts] — relationships like narrative sequence, elaboration, justification ... Discourse-Based Content Planning * specify discourse structure rules * use these rules to dynamically compose texts from constituent elements by reasoning about the role of these elements in the overall text * Typically adopt .blue[AI planning techniques] * A text realises a .blue[plan, with a goal and subplans] * Goal = desired communicative effect * Plan constituents = messages or structures that combine messages (subplans) * Can involve explicit reasoning about the user’s beliefs * Often based on ideas from Rhetorical Structure Theory ] --- .left-column[ ## Preneural D2T Generation ### Applications ### The D2T Pipeline #### Document Planning ] .right-column[ ### Example Discourse Relations #### Sequence * Two messages can be connected by a SEQUENCE relationship if both have the attribute massage-status = primary #### Elaboration * Two messages can be connected by an ELABORATION relationship if: * they are both have the same message-topic * one of the two messages has message-status = primary #### Contrast * Two messages can be connected by a CONTRAST relationship if: * they both have the same message-topic * they both have the feature absolute-or-relative = relative-to-average * they have different values for relative-difference:direction ] --- .left-column[ ## Preneural D2T Generation ### Applications ### The D2T Pipeline #### Document Planning ] .right-column[ ### Example RST Tree (Train Example) <br> .middle[ <img src="images/lecture2/trainrst.png" width="80%"/> ] ] --- .left-column[ ## Preneural D2T Generation ### Applications ### The D2T Pipeline #### Document Planning ] .right-column[ ### Discourse Planning Algorithm * DocumentPlan = StartMessage * MessageSet = MessageSet - StartMessage * repeat * find a rhetorical operator that will allow attachment of a message to the DocumentPlan * attach message and remove from MessageSet * until MessageSet = 0 or no operators apply ] --- .left-column[ ## Preneural D2T Generation ### Applications ### The D2T Pipeline #### Document Planning ] .right-column[ ### Input Messages and Discourse Tree <pre><code data-trim data-noescape> MonthlyTempMsg ("cooler than average") MonthlyRainfallMsg ("drier than average") RainyDaysMsg ("average number of rain days") RainSoFarMsg ("well below average") RainSpellMsg ("8 days from 11th to 18th") RainAmountsMsg ("amounts mostly small") </code></pre> <img src="images/lecture2/rst.png" width="70%"/> ] --- .left-column[ ## Preneural D2T Generation ### Applications ### The D2T Pipeline #### Document Planning ] .right-column[ ### Discourse Tree and Output Text <img src="images/lecture2/rst.png" width="100%"/> The month was cooler and drier than average, with the average number of rain days, but the total rain for the year so far is well below average. Although there was rain on every day for 8 days from 11th to 18th, rainfall amounts were mostly small. ] --- .left-column[ ## Preneural D2T Generation ### Applications ### The D2T Pipeline #### Document Planning ] .right-column[ ### Document Planning (Summary) * Result = Document Plan * a tree structure populated by messages at its leaf nodes * Next step: realising the messages as text ] --- .left-column[ ## Preneural D2T Generation ### Applications ### The D2T Pipeline #### Microplanning ] .right-column[ ### From Plan to Text * Referring Expression Generation: Deciding how to describe entities * Lexicalisation: Choosing words for input symbols * Aggregation: Using Ellipsis and Coordination to avoid repetition * Surface Realisation: Choosing the syntactic form of sentences * Sentence segmentation: Segmenting the content into sentence size chunks ] --- .left-column[ ## Preneural D2T Generation ### Applications ### The D2T Pipeline #### Microplanning ] .right-column[ ### Generating Referring Expressions Describing entities <pre><code data-trim data-noescape> (John_E_Blaha birthDate 1942_08_26) (John_E_Blaha birthPlace San_Antonio) (John_E_Blaha occupation Fighter_pilot) </code></pre> <br> $\checkmark$ <em><span style="color:blue">John E Blaha</em></span> was born in San Antonio on 1942-08-26. <span style="color:blue"><em>He</em></span> worked as a fighter pilot $\otimes$ <span style="color:blue"><em>John E Blaha</em></span> was born in San Antonio on 1942-08-26. <span style="color:blue"><em>John E Blaha</em></span> worked as a fighter pilot ] --- <h3 id="session-5-0110-10am-12pm">Session 5: 01/10: Neural REG</h3> <p style="color:#3372FF" ;> NADAR Fatima </p> <tr valign="top"> <td align="right" class="bibtexnumber"> </td> <td class="bibtexitem"> Thiago Castro Ferreira, Diego Moussallem, Ákos Kádár, Sander Wubben, and Emiel Krahmer. Neuralreg: An end-to-end approach to referring expression generation. In <em>Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Long Papers)</em>, page 1959–1969, Melbourne, Australia, 2018. [<a href="https://www.aclweb.org/anthology/P18-1182">http</a> ] <blockquote> <font size="-1"> Traditionally, Referring Expression Generation (REG) models first decide on the form and then on the content of references to discourse entities in text, typically relying on features such as salience and grammatical function. In this paper, we present a new approach (NeuralREG), relying on deep neural networks, which makes decisions about form and content in one go without explicit feature extraction. Using a delexicalized version of the WebNLG corpus, we show that the neural model substantially improves over two strong baselines. Data and models are publicly available. </blockquote> </td> </tr> --- .left-column[ ## Preneural D2T Generation ### Applications ### The D2T Pipeline #### Microplanning ] .right-column[ ### Lexicalisation Choosing lexical items <pre><code data-trim data-noescape> (John_E_Blaha birthDate 1942_08_26) </code></pre> <br> * John E Blaha <span style="color:blue"><em>was born</em></span> on 1942-08-26 * John E Blaha <span style="color:blue"><em>'s birthdate</em></span> is 1942-08-26. ] --- .left-column[ ## Preneural D2T Generation ### Applications ### The D2T Pipeline #### Microplanning ] .right-column[ ### Surface Realisation Choosing syntactic structures <pre><code data-trim data-noescape> (John_E_Blaha birthPlace San_Antonio) (John_E_Blaha birthDate 1942_08_26) (John_E_Blaha occupation Fighter_pilot) </code></pre> * John E Blaha, <span style="color:blue"><em>born in San Antonio</em></span>, on 1942-08-26 worked as a fighter pilot * John E Blaha <span style="color:blue"><em>was born in San Antonio</em></span> on 1942-08-26. He worked as a fighter pilot * John E Blaha <span style="color:blue"><em>who was born in San Antonio on 1942-08-26</em></span> worked as a fighter pilot ] --- .left-column[ ## Preneural D2T Generation ### Applications ### The D2T Pipeline #### Microplanning ] .right-column[ ### Aggregation Avoiding repetition <pre><code data-trim data-noescape> (John_E_Blaha birthDate 1942_08_26) (John_E_Blaha birthPlace San_Antonio) (John_E_Blaha occupation Fighter_pilot) </code></pre> * $\checkmark$ <span style="color:blue"><em>John E Blaha</em></span> , born in San Antonio on 1942-08-26, worked as a fighter pilot * $\otimes$ <span style="color:blue"><em>John E Blaha was born</em></span> in San Antonio. <span style="color:blue"><em>John E Blaha was born</em></span> on 1942-08-26. <span style="color:blue"><em>John E Blaha</em></span> worked as a fighter pilot ] --- .left-column[ ## Preneural D2T Generation ### Applications ### The D2T Pipeline #### Microplanning ] .right-column[ ### Sentence segmentation Segmenting the content into sentence size chunks <pre><code data-trim data-noescape> (John_E_Blaha birthDate 1942_08_26) (John_E_Blaha birthPlace San_Antonio) (John_E_Blaha occupation Fighter_pilot) </code></pre> * John E Blaha, born in San Antonio on 1942-08-26, worked as a fighter pilot. * John E Blaha was born in San Antonio on 1942-08-26. He worked as a fighter pilot. ] --- class: center, middle, large ## MR-to-Text Generation --- .left-column[ ## Pre-Neural NLG ### D2T ### MR2T ] .right-column[ ### Generating from Meaning Representations #### Some Example Tasks #### Grammar Based Approaches #### Statistical Models ] --- .left-column[ ## MR2T ### Example Tasks ] .right-column[ ### Example #1: Dependency Trees Surface Realization Challenge 2011 and 2018 .bottom[<img src="images/lecture1/deptree2text.png">] ] --- .left-column[ ## MR2T ### Example Tasks ] .right-column[ ### Example #2: Abstract Meaning Representations (AMR) <img src="images/lecture1/amr.png" width="50%"/> *The London emergency services said that altogether 11 people had been sent to hospital for treatment due to minor wounds.* ] --- .left-column[ ## MR2T ### Example Tasks ] .right-column[ ## Example System #3: Dialog Moves .left[ Recommend name[The Eagle], eatType[coffee shop], food[French], priceRange[moderate], customerRating[3/5], area[riverside], kidsFriendly[yes], near[Burger King] ] *The three star coffee shop, The Eagle, gives families a mid-priced dining experience featuring a variety of wines and cheeses. Find The Eagle near Burger King* ] --- .left-column[ ## MR2T ### Example Tasks ### Grammar-Based Approaches ] .right-column[ ### Generating from Description Logic Formulae The input Query is converted to a flat representation **DL**: Professor $\sqcap$ Researcher $\sqcap \; \exists$teach.LogicCourse $\sqcap \; \exists$worksAt.AlicanteUniversity **Flat**: Professor(p) Researcher(p) teach(p c) LogicCourse(c) worksAt(p u) AlicanteUniversity(u) ] --- .left-column[ ## MR2T ### Example Tasks ### Grammar-Based Approaches ] .right-column[ ### Tree Adjoining Grammar * The grammar is a set of trees (rules) * Grammar rules (trees) map words to syntactic trees and semantic representations (flat representation of DL subformulae) * Trees can be combined using substitution ($\downarrow$) or adjunction (*)  <!-- .element: style="float: center; width: 70%" --> ] --- .left-column[ ## MR2T ### Example Tasks ### Grammar-Based Approaches ] .right-column[ ### Step 1: Lexical Selection Trees whose semantics subsume the input are selected (variables are unified)  <!-- .element: style="float: center; width: 70%" --> ] --- .left-column[ ## MR2T ### Example Tasks ### Grammar-Based Approaches ] .right-column[ ### Step 2: Combining trees The tree for "The car" is substituted in the "should run on" tree .bottom[  <!-- .element: style="float: center; width: 70%" --> ] ] --- .left-column[ ## MR2T ### Example Tasks ### Grammar-Based Approaches ] .right-column[ ### Step 2: Combining trees The tree for "diesel" is substituted in the "the car should run on" tree  <!-- .element: style="float: center; width: 40%" --> ] --- .left-column[ ## MR2T ### Example Tasks ### Grammar-Based Approaches ] .right-column[ ### Step 3: Extraction Sentences are generated by extracting the yield of all trees which are syntactically complete and which cover the input semantics  <!-- .element: style="float: center; width: 40%" --> ] --- .left-column[ ## MR2T ### Example Tasks ### Grammar-Based Approaches ] .right-column[ ### Hybrid Statistical, Grammar-Based Approaches * Grammar-Based approach yields .blue[multiple outputs and intermediate results] * Statistical modules are used to reduce ambiguity * Language models To choose between comparable intermediate results (the black cat/the cat black) * Hypertaggers To prune the initial search space * Rankers To determine the best output ] --- .left-column[ ## MR2T ### Example Tasks ### Grammar-Based Approaches ### Statistical Approaches ] .right-column[ #### Overgenerate and Rank Langkilde 1998 * Generates from AMR * A lexicon and keyword based grammar rules are used to map the input AMR to words * A Language Model is used to extract from the resulting lattice the sentence with highest probability. #### Cascaded Classifiers Bohnet et al. 2010 * Generates from Multilevel Annotated (Parallel) Data * Each Level encodes a different way of representing the output text (from deeper to more surface level representations * For each pair of adjacent levels of annotation, a separate SVM (Support Vector Machine) decoder is learned. ] --- <h3>Session 3: 24/09. Statistical MR2T Generation</h3> <p style="color:#3372FF" ;> AKANI Aduenu </p> <tr valign="top"> <td align="right" class="bibtexnumber"> </td> <td class="bibtexitem"> Irene Langkilde and Kevin Knight. Generation that exploits corpus-based statistical knowledge. In <em>COLING 1998 Volume 1: The 17th International Conference on Computational Linguistics</em>, 1998. [<a href="https://www.aclweb.org/anthology/C98-1112">http</a>] <blockquote> <font size="-1"> We present a simple, robust generation system which performs content selection and surface realization in a unified, domain-independent framework. In our approach, we break up the end-to-end generation process into a sequence of local decisions, arranged hierarchically and each trained discriminatively. We deployed our system in three different domains—Robocup sportscasting, technical weather forecasts, and common weather forecasts, obtaining results comparable to state-ofthe-art domain-specific systems both in terms of BLEU scores and human evaluation. </font> </blockquote> </td> </tr> <p style="color:#3372FF" ;> AKHMETOV Alisher </p> </body> <tr valign="top"> <td align="right" class="bibtexnumber"> </td> <td class="bibtexitem"> Bernd Bohnet, Leo Wanner, Simon Mille, and Alicia Burga. Broad coverage multilingual deep sentence generation with a stochastic multi-level realizer. In <em>Proceedings of the 23rd International Conference on Computational Linguistics (Coling 2010)</em>, pages 98--106, Beijing, China, August 2010. Coling 2010 Organizing Committee. [<a href="https://www.aclweb.org/anthology/C10-1012">http</a>] <blockquote> <font size="-1"> Most of the known stochastic sentence generators use syntactically annotated corpora, performing the projection to the surface in one stage. However, in full-fledged text generation, sentence realization usually starts from semantic (predicate-argument) structures. To be able to deal with semantic structures, stochastic generators require semantically annotated, or, even better, multilevel annotated corpora. Only then can they deal with such crucial generation issues as sentence planning, linearization and morphologization. Multilevel annotated corpora are increasingly available for multiple languages. We take advantage of them and propose a multilingual deep stochastic sentence realizer that mirrors the state-ofthe-art research in semantic parsing. The realizer uses an SVM learning algorithm. For each pair of adjacent levels of annotation, a separate decoder is defined. So far, we evaluated the realizer for Chinese, English, German, and Spanish. </font> </blockquote> </td> </tr> --- class: center, middle, large ## Text-to-Text Generation --- .left-column[ ## Pre-Neural NLG ### D2T ### MR2T ### T2T ] .right-column[ #### Main Tasks #### Four Base Operations #### Approaches ] --- .left-column[ ## T2T ### Main Tasks ] .right-column[ ### Example #1: Summarization .center[<img src="images/lecture1/summarising.png" width="70%">] [Grusky et al., 2018](http://aclweb.org/anthology/N18-1065)<!-- .element: style="text-align: right; float: right; width: 50%; font-size: small;" --> ] --- .left-column[ ## T2T ### Main Tasks ] .right-column[ ### Example #2: Simplification .blue[Complex] In 1964 Peter Higgs published his second paper in Physical Review Letters describing Higgs mechanism which predicted a new massive spin-zero boson for the first time. <br> <br> .blue[Simple] Peter Higgs wrote his paper explaining Higgs mechanism in 1964. Higgs mechanism predicted a new elementary particle. ] --- .left-column[ ## T2T ### Main Tasks ] .right-column[ ### Example #3: Paraphrasing .center[<img src="images/lecture1/paraphrasing.png" width="80%">] [Narayan et al, 2016](http://aclweb.org/anthology/W/W16/W16-6625.pdf)<!-- .element: style="text-align: right; float: right; width: 50%; font-size: small;" --> ] --- .left-column[ ## T2T ### Main Tasks ### Operations ] .right-column[ ### Split ### Rewrite ### Move ### Delete ] --- .left-column[ ## T2T ### Main Tasks ### Operations ] .right-column[ ### Split <img src="images/lecture1/split.png" width="100%" /> ] --- .left-column[ ## T2T ### Main Tasks ### Operations ] .right-column[ ### Move <img src="images/lecture1/reorder.png" width="100%" /> ] --- .left-column[ ## T2T ### Main Tasks ### Operations ] .right-column[ ### Rewrite <img src="images/lecture1/rewrite.png" width="100%" /> ] --- .left-column[ ## T2T ### Main Tasks ### Operations ] .right-column[ ### Delete <img src="images/lecture1/delete.png" width="100%" /> ] --- .left-column[ ## T2T ### Main Tasks ### Operations ### Approaches ] .right-column[ ### Rule-Based * Manually specify rules for splitting, reordering, deleting and splitting ### Statistical * Learn the four operations * Training Data: Simple and Normal Wikipedia ] --- .left-column[ ## T2T ### Main Tasks ### Operations ### Approaches ### Summarization ] .right-column[ ### Abstractive vs. Extractive Summarization <img src="images/lecture3/xtr-abstr-sum.png" width = "80%" /> * Human summarization (ABSTRACTIVE) * extract key information from input document * aggregate this information (remove redundancies) * abstract (produce coherent text) * Automatic text summarization (mostly EXTRACTIVE) * extract key sentences from the input document * combine them to form a summary. ] --- .left-column[ ## T2T ### Main Tasks ### Operations ### Approaches ### Summarization ] .right-column[ ### Constraints on Extractive Summary The generated summary * should not exceed a given **length** * should contain all **relevant information** and * should **avoid repetitions**. ### Three Main Steps * create an intermediate representation of the input sentences * score these sentences based on that representation * create a summary by selecting the highest scoring sentences ] --- .left-column[ ## T2T ### Main Tasks ### Operations ### Approaches ### Summarization ] .right-column[ ### Three main ways of representing text * Frequency-based * Probability * LLR * TF*IDF * Graph * Semantics * Lexical chains * Latent Semantic Analysis * Discourse Structure * Vectorial ] --- .left-column[ ## T2T ### Main Tasks ### Operations ### Approaches ### Summarization ] .right-column[ ### Lexical Frequency (Luhn 1958) * In a document, words that are .blue[frequent] are descriptive of the document's content * The .blue[sentences] that convey the most important information in a document are those that contain many such descriptive words. * Extract important sentences ] --- .left-column[ ## T2T ### Main Tasks ### Operations ### Approaches ### Summarization ] .right-column[ ### Frequency approaches * use .blue[word probability] to identify those words that represent a document topic and a .blue[frequency threshold] to identify frequent content (non stop) words in a document as descriptive of the document's topic. * (Conroy et al. 2006) used the .blue[log-likelihood ratio] test to extract those words that have a likelihood statistic greater than what one would expect by chance. * Other approaches have used .blue[TF.IDF ratio] ] --- <h3>Session 4: 26/09. Statistical and Symbolic T2T Generation</h3> <tr valign="top"> <td align="right" class="bibtexnumber"> </td> <td class="bibtexitem"> <body> <p style="color:#3372FF" ;> BALARD Srilakshmi </p> </body> Advaith Siddharthan. Text Simplification using Typed Dependencies: A Comparison of the Robustness of Different Generation Strategies. In <em>Proceedings of the 13th European Workshop on Natural Language Generation (ENLG),</em>, pages 2--, Nancy, France, September 2011. Association for Computational Linguistics. [<a href="https://www.aclweb.org/anthology/W11-2802">http</a> ] <blockquote> <font size="-1"> We present a framework for text simplification based on applying transformation rules to a typed dependency representation produced by the Stanford parser. We test two approaches to regeneration from typed dependencies: (a) gen-light, where the transformed dependency graphs are linearised using the word order and morphology of the original sentence, with any changes coded into the transformation rules, and (b) gen-heavy, where the Stanford dependencies are reduced to a DSyntS representation and sentences are generating formally using the RealPro surface realiser. The main contribution of this paper is to compare the robustness of these approaches in the presence of parsing errors, using both a single parse and an n-best parse setting in an overgenerate and rank approach. We find that the gen-light approach is robust to parser error, particularly in the n-best parse setting. On the other hand, parsing errors cause the realiser in the genheavy approach to order words and phrases in ways that are disliked by our evaluators. </font> </blockquote> <tr valign="top"> <td align="right" class="bibtexnumber"> </td> <td class="bibtexitem"> <body> <p style="color:#3372FF" ;> BOUZIGUES Aymeric </p> </body> <tr valign="top"> <td align="right" class="bibtexnumber"> </td> <td class="bibtexitem"> Zhemin Zhu, Delphine Bernhard, and Iryna Gurevych. A monolingual tree-based translation model for sentence simplification. In <em>Proceedings of the 23rd International Conference on Computational Linguistics (Coling 2010)</em>, pages 1353--1361. Coling 2010 Organizing Committee, 2010. [<a href="http://aclweb.org/anthology/C10-1152">http</a>] <blockquote> <font size="-1"> In this paper, we consider sentence simplification as a special form of translation with the complex sentence as the source and the simple sentence as the target. We propose a Tree-based Simplification Model (TSM), which, to our knowledge, is the first statistical simplification model covering splitting, dropping, reordering and substitution integrally. We also describe an efficient method to train our model with a large-scale parallel dataset obtained from the Wikipedia and Simple Wikipedia. The evaluation shows that our model achieves better readability scores than a set of baseline systems. </font> </blockquote> <p> </td> </tr> --- <h3>Session 4: 26/09. Statistical and Symbolic T2T Generation</h3> <p style="color:#3372FF" ;> DIEUDONAT Lea </p> </body> <tr valign="top"> <td align="right" class="bibtexnumber"> </td> <td class="bibtexitem"> JohnM. Conroy, JudithD. Schlesinger, and DianneP. O'Leary. Topic-focused multi-document summarization using an approximate oracle score. In <em>Proceedings of the COLING/ACL 2006 Main Conference Poster Sessions</em>, pages 152--159, Sydney, Australia, July 2006. Association for Computational Linguistics. [<a href="https://www.aclweb.org/anthology/P06-2020">http</a>] <blockquote> <font size="-1"> We consider the problem of producing a multi-document summary given a collection of documents. Since most successful methods of multi-document summarization are still largely extractive, in this paper, we explore just how well an extractive method can perform. We introduce an “oracle” score, based on the probability distribution of unigrams in human summaries. We then demonstrate that with the oracle score, we can generate extracts which score, on average, better than the human summaries, when evaluated with ROUGE. In addition, we introduce an approximation to the oracle score which produces a system with the best known performance for the 2005 Document Understanding Conference (DUC) evaluation. </font> </blockquote> --- <h3>Session 4: 26/09. Evaluation</h3> <tr valign="top"> <td align="right" class="bibtexnumber"> </td> <td class="bibtexitem"> <body> <p style="color:#3372FF";>GUILLAUME Maxime</p> </body> Kishore Papineni, Salim Roukos, Todd Ward, and Wei-Jing Zhu. Bleu: a method for automatic evaluation of machine translation. In <em>Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics</em>, pages 311--318, Philadelphia, Pennsylvania, USA, July 2002. Association for Computational Linguistics. [ <a href="biblio_bib.html#papineni-etal-2002-bleu">bib</a> | <a href="http://dx.doi.org/10.3115/1073083.1073135">DOI</a> | <a href="https://www.aclweb.org/anthology/P02-1040">http</a> ] <blockquote><font size="-1"> Human evaluations of machine translation are extensive but expensive. Human evaluations can take months to finish and involve human labor that can not be reused. We propose a method of automatic machine translation evaluation that is quick, inexpensive, and language-independent, that correlates highly with human evaluation, and that has little marginal cost per run. We present this method as an automated understudy to skilled human judges which substitutes for them when there is need for quick or frequent evaluations. </font></blockquote> <p> </td> </tr> <h3>Session 5: 01/10. Evaluation</h3> <tr valign="top"> <td align="right" class="bibtexnumber"> </td> <td class="bibtexitem"> <body> <p style="color:#3372FF";>HAN Kelvin</p> </body> Chin-Yew Lin. ROUGE: A package for automatic evaluation of summaries. In <em>Text Summarization Branches Out</em>, pages 74--81, Barcelona, Spain, July 2004. Association for Computational Linguistics. [ <a href="biblio_bib.html#lin-2004-rouge">bib</a> | <a href="https://www.aclweb.org/anthology/W04-1013">http</a> ] <blockquote><font size="-1"> ROUGE stands for Recall-Oriented Understudy for Gisting Evaluation. It includes measures to automatically determine the quality of a summary by comparing it to other (ideal) summaries created by humans. The measures count the number of overlapping units such as n-gram, word sequences, and word pairs between the computer-generated summary to be evaluated and the ideal summaries created by humans. This paper introduces four different ROUGE measures: ROUGE-N, ROUGE-L, ROUGE-W, and ROUGE-S included in the ROUGE summarization evaluation package and their evaluations. Three of them have been used in the Document Understanding Conference (DUC) 2004, a large-scale summarization evaluation sponsored by NIST. </font></blockquote> <p> </td> </tr>