Articulatory copy synthesis

The videos play correctly on a Mac or in Chromium.

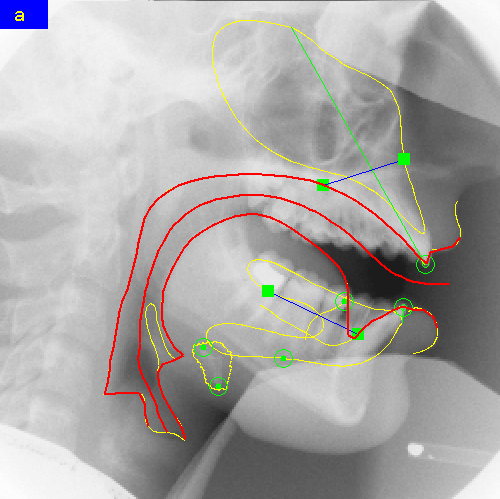

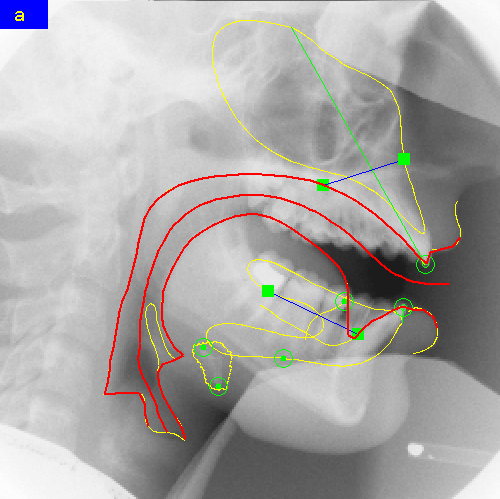

The objective is to synthesize speech from X-ray films of the vocal tract (database of the Institut de Phonétique de Strasbourg). The articulators, the tongue in particular, were delineated by hand or semi-automatically with the Xarticulator software. The following film shows the X-ray images together with the contours.

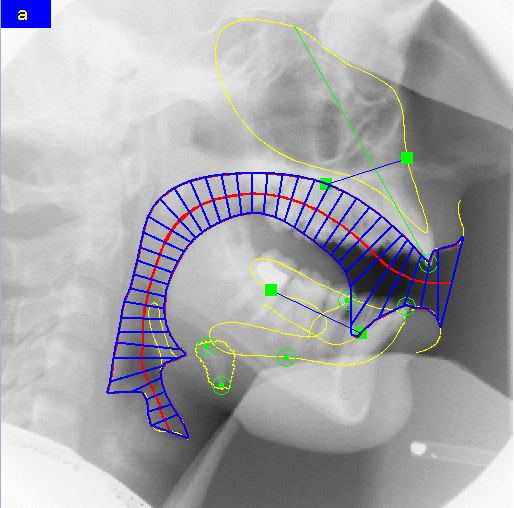

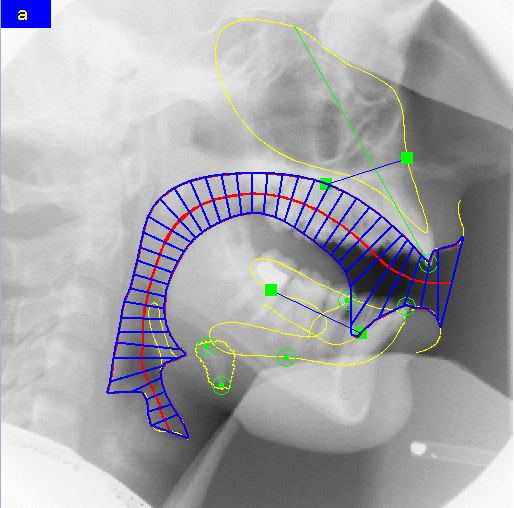

Then, the vocal tract shape in each image (one every 25 ms) is transformed into an area function, which is fed into the acoustic simulation.
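The page does not say how the contours are converted into areas. As a minimal sketch, assuming the widely used power-law ("alpha-beta") relation A = alpha * d^beta between the mid-sagittal distance d and the cross-sectional area A, the conversion for one image could look like the following. The function name and the default coefficient values are hypothetical placeholders, not the values used in this project; in practice the coefficients depend on the region of the vocal tract.

```python
def sagittal_to_area(distances_cm, alpha=1.5, beta=1.5):
    """Convert mid-sagittal distances (cm) to cross-sectional areas (cm^2).

    Uses the power-law model A = alpha * d**beta. The default alpha and
    beta are illustrative assumptions, not values from the project.
    """
    areas = []
    for d in distances_cm:
        # A closed section (d <= 0) has zero area.
        areas.append(alpha * d ** beta if d > 0 else 0.0)
    return areas


# One area function per image: a list of section areas from glottis to lips.
example_distances = [0.0, 1.0, 4.0]
example_areas = sagittal_to_area(example_distances, alpha=2.0, beta=1.5)
```

Applied to every image, this yields one area function per 25 ms frame, which is the input the acoustic simulation expects.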


The acoustic signal is synthesized following the strategy proposed by Maeda (S. Maeda, "Phoneme as concatenable units: VCV synthesis using a vocal tract synthesizer", in Sound Patterns of Connected Speech: Description, Models and Explanation, Proceedings of the symposium held at Kiel University, 1996).

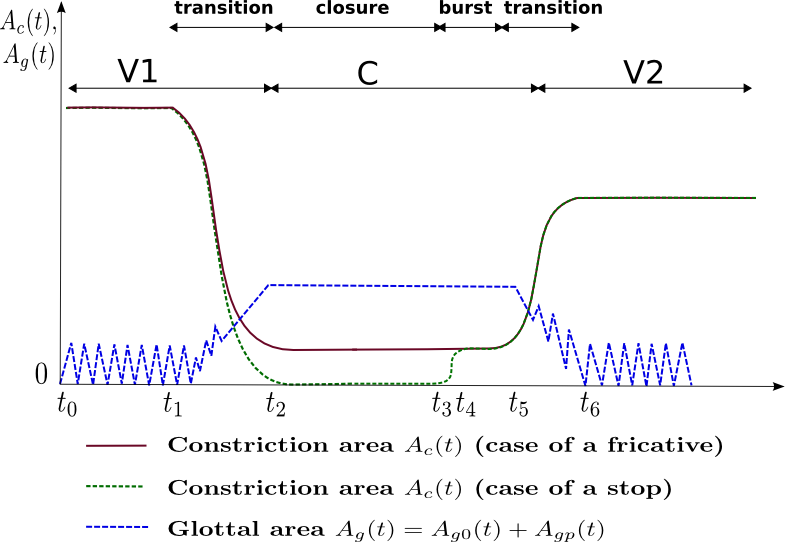

The key point is to synchronize the excitation source correctly with the images that give the geometry of the vocal tract, because the images are sparse (one every 25 ms). The following figure shows the schematic timing scenario used to control the acoustic simulation.

F0 and time points have been determined from the original speech signal. Since images of the vocal tract are not available at each of the time points (t0, t1... t6), it is necessary to duplicate some images.
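The duplication step can be sketched as follows. The page does not state which image is reused at a given time point, so this example assumes the simplest rule: each time point takes the image closest to it in time. The function name and the sample time values are hypothetical.

```python
def assign_images(time_points_ms, n_images, frame_period_ms=25.0):
    """Return, for each control time point, the index of the nearest image.

    Images are assumed to be taken at 0, 25, 50, ... ms. Because there are
    fewer images than time points, some indices repeat, which is the
    duplication of images described above.
    """
    indices = []
    for t in time_points_ms:
        idx = int(t / frame_period_ms + 0.5)   # nearest frame index
        idx = max(0, min(n_images - 1, idx))   # clamp to available images
        indices.append(idx)
    return indices


# Seven time points (t0 ... t6) mapped onto four images.
time_points = [0, 10, 20, 30, 60, 80, 200]
image_indices = assign_images(time_points, n_images=4)
```

With these sample values, images 0, 1 and 3 are each used twice. Interpolating between neighbouring area functions would be an alternative to plain duplication, but the page describes duplication only.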

Here is the synthetic speech signal for the video at the top of the page:

Here is a second example with VCVs (video and synthesized speech signal).

| video | synthetic speech signal |

This project was funded by LORIA in 2012 and carried out by Yves Laprie and Matthieu Loosvelt with the help of Shinji Maeda.