| Field: Social Robotics and Human-Robot Interaction | |
|---|---|

Bio-Inspired Object Reference Recognition in Human-Robot Interaction under Ambiguous Non-Verbal Cues

Read the article at: HAL (preprint)


Object reference recognition in human-robot interaction (HRI) is a challenging research problem, particularly under ambiguous non-verbal cues. This paper proposes a bio-inspired multi-modal fusion algorithm that enables robots to recognize object references from human gaze and pointing gestures. The proposed method integrates and encodes sensory inputs in a dynamic neural field, allowing the robot to adaptively resolve ambiguities in object referencing. The model was evaluated in an experimental setting where participants interacted with a humanoid robot, Furhat, in an object-referencing task. Results demonstrated that the system identified referenced objects with significantly higher accuracy when gaze and pointing cues were combined. Additionally, subjective evaluations using the Godspeed questionnaire indicated that participants perceived the robot more favorably when it engaged in joint attention behaviors. The findings highlight the potential of dynamic neural models for more intuitive and seamless HRI by addressing non-verbal ambiguity in shared workspaces. Future work will explore improved gaze-tracking techniques and closed-loop interaction models to enhance system robustness and adaptability.
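
The abstract does not give the field equations, so the following is only a minimal sketch of the general idea, assuming a one-dimensional Amari-type dynamic neural field defined over positions in the shared workspace. Each cue is modeled as a hypothetical Gaussian input, and every parameter value (resting level `h`, kernel widths, cue positions and amplitudes) is an illustrative assumption, not a value from the paper. With these numbers, neither cue alone lifts the field above threshold, while their sum forms a self-stabilized peak at the referenced location.

```python
import numpy as np

def gaussian(x, center, width, amplitude):
    """Gaussian input profile over positions in the workspace."""
    return amplitude * np.exp(-0.5 * ((x - center) / width) ** 2)

def sigmoid(u, beta=4.0):
    """Output nonlinearity f(u): sites with u > 0 transmit activation to neighbours."""
    return 1.0 / (1.0 + np.exp(-beta * u))

def run_field(stimulus, h=-3.0, tau=10.0, dt=1.0, steps=300):
    """Euler integration of tau * du/dt = -u + h + s(x) + (w * f(u))(x).

    All parameters are hypothetical illustration values, not the paper's.
    """
    n = stimulus.size
    offsets = np.arange(n) - n // 2
    # Mexican-hat kernel: narrow excitation minus broader inhibition.
    kernel = (np.exp(-0.5 * (offsets / 3.0) ** 2)
              - 0.5 * np.exp(-0.5 * (offsets / 10.0) ** 2))
    u = np.full(n, h)  # the field starts at its resting level
    for _ in range(steps):
        interaction = np.convolve(sigmoid(u), kernel, mode="same")
        u = u + dt / tau * (-u + h + stimulus + interaction)
    return u

x = np.arange(101, dtype=float)           # 1-D workspace positions (arbitrary units)
gaze = gaussian(x, 68.0, 5.0, 2.0)        # hypothetical gaze cue
pointing = gaussian(x, 72.0, 5.0, 2.0)    # hypothetical pointing cue

for name, s in [("gaze", gaze), ("pointing", pointing), ("gaze + pointing", gaze + pointing)]:
    u = run_field(s)
    status = "peak" if u.max() > 0 else "no peak"
    print(f"{name}: {status}, max activation at x = {int(np.argmax(u))}")
```

The resting level `h` sets how much combined evidence is needed before a peak forms: raising it toward zero would let a single strong cue trigger a decision on its own.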

AEGO: Modeling Attention for HRI in Ego-Sphere Neural Networks

Read the article at: IROS 2024


Despite important progress in recent years, social robots are still far from exhibiting advanced behavior for interaction and adaptation in human environments. We are therefore interested in studying social cognition in human-robot interaction (HRI), notably in improving communication skills that rely on joint attention (JA) and knowledge sharing. As a multi-dimensional construct, JA involves cognitive skills that constitute forms of social attention at distinct levels of interaction and knowledge sharing, which must be considered in HRI. Since JA involves low-level cognitive processes in humans, we take into account the implications of Moravec’s Paradox and focus on the aspect of knowledge representation. Inspired by 4E cognition principles, we study egocentric localization through the concept of the sensory ego-sphere. We propose a neural network architecture named AEGO that models attention for each agent in the interaction, and we show how to fuse information in a common representation space. From the perspective of dynamic field theory, AEGO takes into account the dynamics of bottom-up and top-down modulation processes and the effects of excitatory and inhibitory neural synaptic interactions. We evaluate the model in simulation and in experiments with the robot Pepper in JA tasks based on proprioception, vision, rudimentary natural language, and Hebbian plasticity. Results show that AEGO is well suited to HRI, allowing the human and the robot to share attention and knowledge about objects in scenarios close to everyday situations. AEGO constitutes a novel brain-inspired architecture for modeling attention that is suitable for multi-agent applications relying on social cognition skills, with the potential to generalize to several robotic platforms and HRI scenarios.
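
AEGO's architecture is not detailed in the abstract. As a minimal sketch under stated assumptions, the code below illustrates two ingredients the abstract names: egocentric localization on a discretized sensory ego-sphere, and Hebbian plasticity linking a rudimentary word to the ego-sphere location attended while that word is heard. The axis convention, bin sizes, learning rate, vocabulary, and object positions are all hypothetical; this is not the AEGO model.

```python
import numpy as np

AZ_BINS, EL_BINS = 36, 18  # hypothetical ego-sphere resolution: 10-degree cells

def ego_sphere_cell(point):
    """Map a 3-D point in the agent's own frame (assumed x forward, y left, z up)
    to an (azimuth, elevation) cell of a discretized ego-sphere."""
    x, y, z = point
    azimuth = np.degrees(np.arctan2(y, x))                 # in [-180, 180]
    elevation = np.degrees(np.arctan2(z, np.hypot(x, y)))  # in [-90, 90]
    az_idx = int((azimuth + 180.0) // (360.0 / AZ_BINS)) % AZ_BINS
    el_idx = min(int((elevation + 90.0) // (180.0 / EL_BINS)), EL_BINS - 1)
    return az_idx, el_idx

def location_vector(point):
    """One-hot activity over the flattened ego-sphere cells."""
    v = np.zeros(AZ_BINS * EL_BINS)
    az, el = ego_sphere_cell(point)
    v[az * EL_BINS + el] = 1.0
    return v

def hebbian_update(weights, pre, post, rate=0.5):
    """Plain Hebbian rule: dW = rate * post * pre^T, so co-active units strengthen their link."""
    return weights + rate * np.outer(post, pre)

words = ["cup", "ball"]                                        # hypothetical vocabulary
objects = {"cup": (1.0, 0.5, -0.2), "ball": (0.8, -0.6, 0.0)}  # hypothetical positions (m)
word_units = np.eye(len(words))

# Word -> location weights, learned over a few episodes in which a word is heard
# while the named object is being attended.
W = np.zeros((AZ_BINS * EL_BINS, len(words)))
for _ in range(3):
    for i, name in enumerate(words):
        W = hebbian_update(W, word_units[i], location_vector(objects[name]))

# Later, hearing "cup" alone reactivates the ego-sphere cell where the cup was attended.
recalled = W @ word_units[words.index("cup")]
cell = tuple(int(i) for i in np.unravel_index(int(np.argmax(recalled)), (AZ_BINS, EL_BINS)))
print(cell, ego_sphere_cell(objects["cup"]))
```

Because locations are expressed relative to the agent's own body, each agent in the interaction would keep its own ego-sphere; fusing them into a common space, as the abstract describes, requires an additional transformation not shown here.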

TOP-JAM: A bio-inspired topology-based model of joint attention for human-robot interaction

Read the article at: ICRA-2023

Coexisting with others and interacting in society implies sharing knowledge and attention about world objects, events, features, episodes, and even imagination or abstract ideas in time and space. Inspired by human phenomenological, cognitive, and behavioral research, this work focuses on the study of joint attention (JA) for human-robot interaction (HRI), based on two main assumptions: a) the perception and representation of attention jointness constitute an isomorphic relation, and b) drawing on dynamic neural field (DNF) theory is a promising way to investigate the contextual and non-linear spatio-temporal relations underlying attention and knowledge sharing in HRI. Building on these considerations, we propose a topology-based model of JA named TOP-JAM, which can represent and track JA states in real time from observed behavioral data. More importantly, the model provides a representation that human beings can directly understand, which conforms to robo-ethical principles in social robotics. This study evaluates the computational properties of the model in simulation, and a real experiment with the robot Pepper shows that TOP-JAM is able to track JA in a triadic interaction scenario.

Hybrid human-neurorobotics approach to primary intersubjectivity via active inference

Read the articles at: ICRA-2020 and Front Psychol


Interdisciplinary efforts from developmental psychology, phenomenology, and philosophy of mind have studied the rudiments of social cognition and conceptualized distinct forms of intersubjective communication and interaction in early human life. Interaction theorists consider primary intersubjectivity a non-mentalist, pre-theoretical, non-conceptual kind of process that grounds a certain level of communication and understanding and supports higher-level cognitive skills. We study primary intersubjectivity as a second-person perspective experience characterized by predictive engagement, where perception, cognition, and action are accounted for within a hermeneutic circle in dyadic interaction. Hence, from the perspective of neurorobotics and our interpretation of active inference in the free-energy principle, we investigate the relations among intentionality, motor compliance, cognitive compliance, and behavior emergence within a PVRNN neural network model. Our experiments with the humanoid robot Torobo open interesting perspectives for the bio-inspired study of developmental and social processes, with potential applications in fields such as educational technology and cognitive rehabilitation.


| Field: Psychology | |
|---|---|

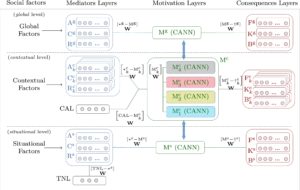

A dynamic computational model of motivation based on self-determination theory and CANN

Read the article at: Information Sciences


The hierarchical model of intrinsic and extrinsic motivation (HMIEM) is a framework based on the principles of self-determination theory (SDT) that describes human motivation from a multilevel perspective and integrates knowledge on the personality and social psychological determinants of motivation and its consequences. Although HMIEM has grounded numerous correlational studies in diverse fields over the last decades, it is conceptually defined as a schematic representation of the dynamics of motivation, which is not suited to tracking-based research on human and artificial agents. In this work, we propose an analytic description named the dynamic computational model of motivation (DCMM), a computational framework of motivation inspired by HMIEM and based on continuous attractor neural networks (CANN). In DCMM, the motivation state is represented on a self-determination continuum with recurrent feedback connections and receives inputs from heterogeneous layers. Through simulations, we show how complete scenarios can be modeled in DCMM. A field study with faculty participants illustrates how DCMM can be fed with data from observations of SDT constructs. We believe that DCMM is relevant for investigating unresolved issues in HMIEM and potentially interesting to related fields, including psychology, artificial intelligence, behavioral and developmental robotics, and educational technology.
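
The abstract does not give DCMM's equations. The sketch below is a generic continuous attractor neural network, assuming a standard one-dimensional CANN formulation with Gaussian recurrent excitation and divisive global inhibition, laid out along a hypothetical self-determination continuum. It illustrates the two properties that make a CANN attractive for tracking a motivation state: activity persists as a localized bump after the input is removed, and the bump moves when new input arrives nearby. The continuum's scale, all parameters, and the input profiles are assumptions, not DCMM's.

```python
import numpy as np

# Hypothetical 1-D self-determination continuum, discretized into 201 units
# (0 = amotivation ... 10 = intrinsic motivation); the labels are illustrative only.
x = np.linspace(0.0, 10.0, 201)
a, J0, k = 0.5, 1.0, 0.5   # kernel width, coupling strength, global inhibition
tau, dt = 1.0, 0.05        # time constant and Euler step

# Translation-invariant Gaussian recurrent weights; with density rho = 1/dx,
# a self-sustained bump exists for 0 < k < rho * J0**2 / (8 * sqrt(2*pi) * a).
diff = x[:, None] - x[None, :]
J = J0 / (np.sqrt(2.0 * np.pi) * a) * np.exp(-diff**2 / (2.0 * a**2))

def step(u, external):
    """One Euler step of tau * du/dt = -u + J r + I, with divisive global inhibition."""
    u_pos = np.maximum(u, 0.0)
    r = u_pos**2 / (1.0 + k * np.sum(u_pos**2))
    return u + dt / tau * (-u + J @ r + external)

def run(u, external, steps):
    for _ in range(steps):
        u = step(u, external)
    return u

def cue(center, amplitude=1.0):
    """Hypothetical external input centred on one point of the continuum."""
    return amplitude * np.exp(-(x - center)**2 / (4.0 * a**2))

def state(u):
    """Read out the current motivation state as the position of maximal activity."""
    return float(x[np.argmax(u)])

no_input = np.zeros_like(x)
u_driven = run(np.zeros_like(x), cue(4.0), 200)   # inputs push the state to x = 4
u_memory = run(u_driven, no_input, 400)           # inputs removed: the bump persists
u_moved = run(u_memory, cue(4.5), 1000)           # new evidence: the bump tracks it

print(state(u_driven), state(u_memory), state(u_moved), round(float(u_memory.max()), 2))
```

The recurrent feedback is what keeps the state alive between observations; with `k` above the critical value the bump would decay once the inputs stop, and the network would lose its memory of the last motivation state.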

| Field: Underwater Robotics | |
|---|---|

Neural network for black-box fusion of underwater robot localization under unmodeled noise

Read the articles at: Rob Auton Syst and IROS-2018


Research in autonomous robotics has focused on fusing information from redundant estimates. Choosing a fusion policy that reduces the impact of unmodeled noise and is computationally efficient remains an open research issue. This work studies underwater localization, a challenging research problem given the dynamic nature of the environment. To this end, we explore navigation task scenarios based on inertial and geophysical sensing. We propose a neural network framework named B-PR-F that heuristically performs adaptive information fusion, based on the principle of contextual anticipation of the localization signal within an ordered processing neighborhood. In the framework, black-box unimodal estimates are related to the task context, and the confidence in each individual estimate is evaluated before information is fused. A study conducted in a virtual environment illustrates the relevance of the model for fusing information under multiple task scenarios. A real experiment shows that our model outperforms the Kalman filter and Augmented Monte Carlo Localization algorithms in this task. We believe the proposed principle can be relevant to related application fields involving state estimation from the fusion of redundant information.
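
B-PR-F itself is a neural framework whose internals are not given here. As a point of comparison only, the sketch below shows a conventional way to evaluate confidence before fusing: each black-box estimate is checked against an anticipated position, and the surviving estimates are combined by inverse-variance weighting, which is the minimum-variance linear combination of independent, unbiased estimates. The gating threshold, variances, positions, and sensor labels are assumptions; this is not B-PR-F.

```python
import numpy as np

def fuse_estimates(estimates, variances, predicted, gate=3.0):
    """Confidence-gated, inverse-variance fusion of redundant position estimates.

    estimates: (m, d) positions, one row per black-box localizer.
    variances: (m,) assumed scalar variance of each localizer.
    predicted: (d,) position anticipated from the previous state.
    gate: a source is rejected if its distance to the prediction exceeds
          gate * its standard deviation (a hypothetical confidence test).
    """
    estimates = np.asarray(estimates, dtype=float)
    variances = np.asarray(variances, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    deviation = np.linalg.norm(estimates - predicted, axis=1)
    accepted = deviation <= gate * np.sqrt(variances)
    if not accepted.any():
        return predicted, accepted  # no trustworthy source: keep the anticipated position
    weights = 1.0 / variances[accepted]
    fused = weights @ estimates[accepted] / weights.sum()
    return fused, accepted

predicted = [10.0, 5.0]                  # hypothetical anticipated position (m)
estimates = [[10.2, 5.1],                # e.g. inertial dead reckoning
             [9.9, 4.8],                 # e.g. geophysical map matching
             [14.0, 9.0]]                # a source corrupted by unmodeled noise
variances = [0.25, 0.25, 0.25]
fused, accepted = fuse_estimates(estimates, variances, predicted)
print(fused, accepted)
```

With these numbers the third source is rejected and the fused position is the mean of the first two; without the gate, the corrupted source would pull the average toward (14, 9).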

| Field: Cognitive Robotics | |
|---|---|

Grounding Humanoid Visually Guided Walking: From Action-independent to Action-oriented Knowledge

Read the articles at: Information Sciences


In the context of humanoid and service robotics, it is essential that the agent can position itself with respect to objects of interest in the environment. By relying mostly on the cognitivist conception of artificial intelligence, research on visually guided walking has tended to overlook the characteristics of the context in which behavior occurs. Consequently, considerable efforts have been directed toward defining action-independent explicit models of the solution, often resulting in high computational requirements. Inspired by embodied cognition research, this study focuses on the analysis of sensory-motor coupling, notably the relation between embodiment, information, and action-oriented representation. Hence, by mimicking human walking, we propose a behavior scheme that endows the agent with the skill of approaching stimuli. A significant contribution to object discrimination comes from an efficient visual attention mechanism that exploits the redundancies and statistical regularities induced by sensory-motor coordination, so that the information flow is anticipated from the fusion of visual and proprioceptive features in a Bayesian network. The solution was implemented on the humanoid platform Nao, which accomplished the task in an unstructured scenario.
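
The structure of the paper's Bayesian network is not described in the abstract. The simplest Bayesian network that fuses two kinds of evidence, with visual and proprioceptive features assumed conditionally independent given the object, is sketched below to show how a feature that is ambiguous on its own can be disambiguated by the other. The feature choices, class models, and priors are hypothetical.

```python
import math

def gaussian_pdf(value, mean, std):
    """Likelihood of a scalar feature under a Gaussian class model."""
    return math.exp(-0.5 * ((value - mean) / std) ** 2) / (std * math.sqrt(2.0 * math.pi))

def posterior(models, visual, proprio):
    """Two-evidence Bayesian network with conditionally independent features:
    P(c | v, p) is proportional to P(c) * P(v | c) * P(p | c)."""
    scores = {
        name: prior * gaussian_pdf(visual, *v_model) * gaussian_pdf(proprio, *p_model)
        for name, (prior, v_model, p_model) in models.items()
    }
    total = sum(scores.values())
    return {name: score / total for name, score in scores.items()}

# Hypothetical class models: (prior, (visual mean, std), (proprioceptive mean, std)).
# The visual feature (e.g. a normalized hue) barely separates the two candidates,
# while the proprioceptive feature (e.g. head yaw in degrees) does.
models = {
    "target":     (0.5, (0.9, 0.1), (20.0, 10.0)),
    "distractor": (0.5, (0.8, 0.1), (-20.0, 10.0)),
}
post = posterior(models, visual=0.85, proprio=15.0)
print(post)
```

Here the visual observation is equally likely under both objects, so the decision is carried entirely by the proprioceptive evidence; in a real system, the likelihood models would be learned from the sensory-motor regularities the abstract mentions.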