My goal is to bring robots closer to humans by incorporating human feedback into the robot's learning and control processes. To this end, I focus on the social and physical interaction between the robot and the human.

Some recent topics:

=> Whole-body dynamics estimation and control of physical interaction


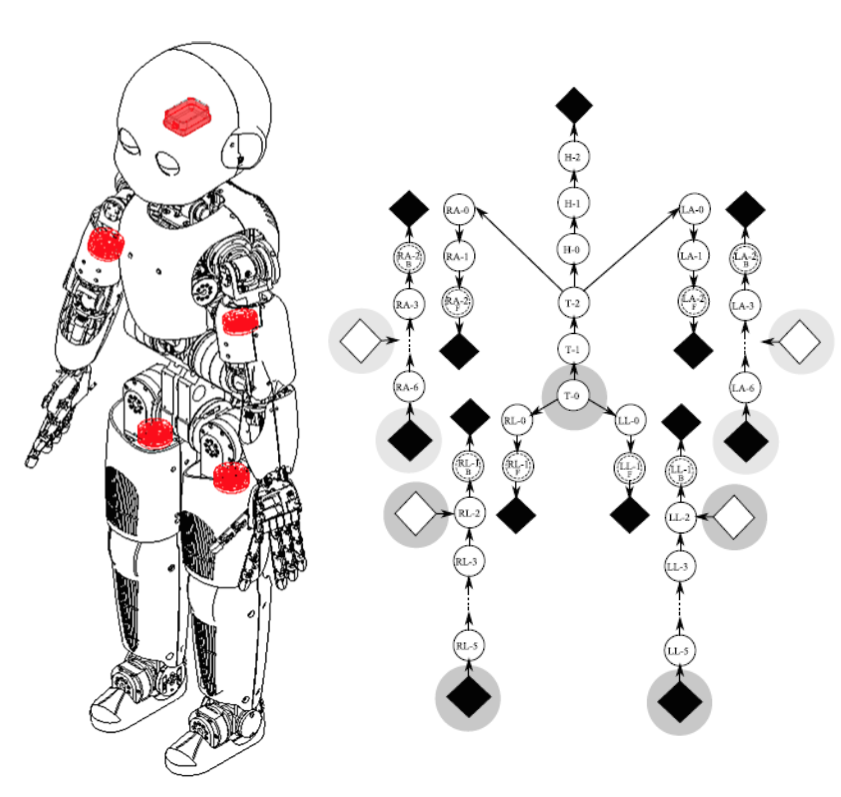

To interact physically, the robot needs a reliable estimate of its whole-body dynamics and its contact forces. For robots such as iCub, which are not equipped with joint torque sensors, the main problems are: 1) how to retrieve the interaction forces without dedicated sensors, in the presence of multiple contacts at arbitrary locations; 2) how to estimate the joint torques under such conditions. During my PhD at IIT, we developed the theory of Enhanced Oriented Graphs (EOG), which makes it possible to compute the whole-body robot dynamics online by combining measurements from force/torque, inertial, and tactile sensors. This estimation method has been implemented in the iDyn library, included in the iCub software. More generally, I am interested in the estimation and control of the robot when it interacts physically with rigid and non-rigid environments and with humans, which I now investigate mainly within the CODYCO project.
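As a point of reference, the estimation problem above can be written with the standard floating-base rigid-body dynamics. This is the generic textbook form, not the EOG formulation itself, and the symbols are notation assumed here for illustration: $q$ is the robot configuration, $\nu$ the generalized velocity (base twist and joint velocities), $M$ the mass matrix, $h$ the Coriolis, centrifugal and gravity terms, $\tau$ the joint torques, and $f_k$ the external wrench at contact $k$ with Jacobian $J_k$.

$$
M(q)\,\dot{\nu} + h(q,\nu) = \begin{bmatrix} 0 \\ \tau \end{bmatrix} + \sum_{k} J_k(q)^{\top} f_k
$$

With $S = [\,0 \;\; I\,]$ selecting the joint rows, an estimate $\hat{f}_k$ of the contact wrenches gives the joint torques without dedicated torque sensors:

$$
\hat{\tau} = S \Big( M(q)\,\dot{\nu} + h(q,\nu) - \sum_{k} J_k(q)^{\top} \hat{f}_k \Big)
$$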

References: survey paper on robotic simulators, Humanoids 2011, ICRA 2015, Autonomous Robots 2012


=> Human-robot interaction (with ordinary people)


When the robot interacts with a human, we must look at the whole set of exchanged signals: physical (i.e., exchanged forces) and social (verbal and non-verbal).

Is it possible to alter the dynamics of such signals by varying the robot's behavior? Are these signals influenced by individual factors?

I am interested in studying these signals during human-robot collaborative tasks, especially when ordinary people with no background in robotics interact with the robot.

These elements are investigated mainly in the EDHHI project.

=> Multimodal deep-learning for robotics


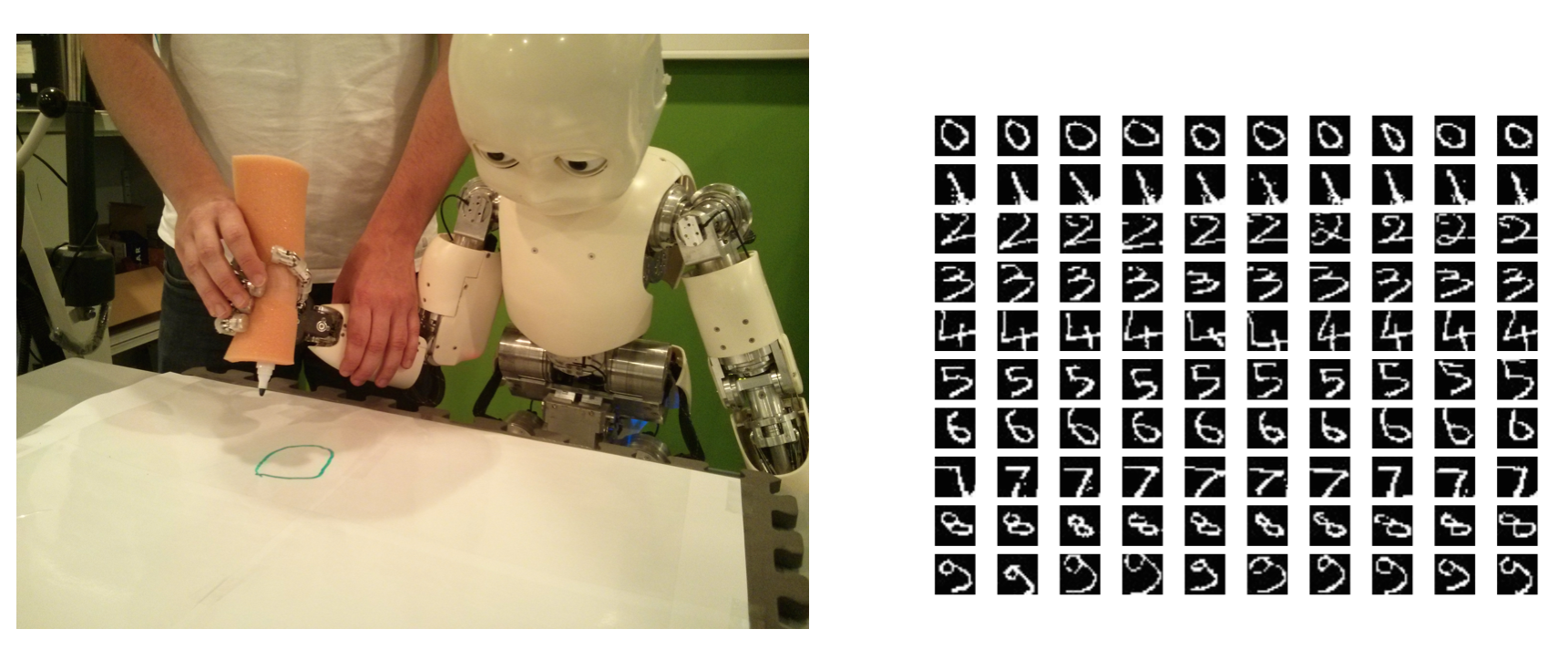

We proposed an architecture based on auto-encoders for multimodal learning of robot skills. We applied the architecture to the problem of learning to draw numbers, combining visual, proprioceptive and auditory information.


=> Dealing with uncertainty


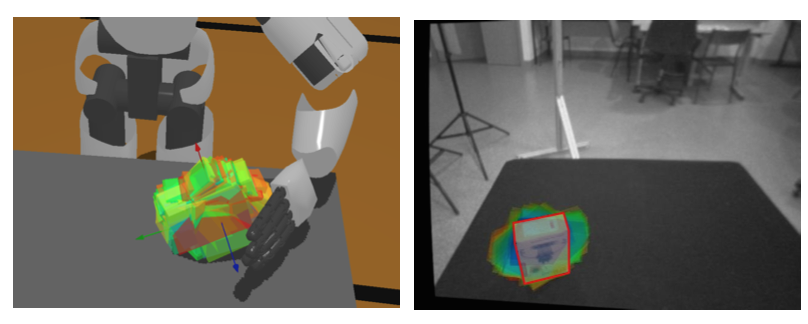

The real world is uncertain and noisy: as humans do, the robot should take this uncertainty into account when computing its actions, before it acts. We proposed a new grasp planning method that explicitly accounts for the uncertainty in the object pose, which the robot estimates from a point cloud acquired by its noisy cameras.
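To illustrate the general idea of planning under pose uncertainty, here is a minimal sketch. It is not the method from our paper: it assumes a toy 2D setting, Gaussian noise on the object position, and a purely geometric success test. All function names and parameters are hypothetical.

```python
import math
import random


def grasp_succeeds(grasp_xy, object_xy, tolerance):
    """Toy success model (assumption): the grasp works if the gripper
    center lies within `tolerance` meters of the true object position."""
    return math.dist(grasp_xy, object_xy) <= tolerance


def expected_success(grasp_xy, mean_xy, sigma, tolerance,
                     n_samples=2000, seed=0):
    """Monte Carlo estimate of the success probability of one grasp,
    with the object position Gaussian around `mean_xy` (std `sigma`
    on each axis). A fixed seed makes candidates comparable."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_samples):
        sample = (rng.gauss(mean_xy[0], sigma),
                  rng.gauss(mean_xy[1], sigma))
        hits += grasp_succeeds(grasp_xy, sample, tolerance)
    return hits / n_samples


def best_grasp(candidates, mean_xy, sigma, tolerance):
    """Return the candidate with the highest estimated success probability."""
    return max(
        candidates,
        key=lambda g: expected_success(g, mean_xy, sigma, tolerance),
    )


# Hypothetical candidates (meters) and an estimated object position.
candidates = [(0.50, 0.00), (0.52, 0.01), (0.60, 0.10)]
chosen = best_grasp(candidates, mean_xy=(0.52, 0.01),
                    sigma=0.01, tolerance=0.02)
```

Each candidate is scored by how often it would succeed over many plausible object positions, instead of being evaluated once against the single most likely position. A real planner would sample full 6D poses and use a physically meaningful grasp quality measure.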


=> Multimodal and active learning


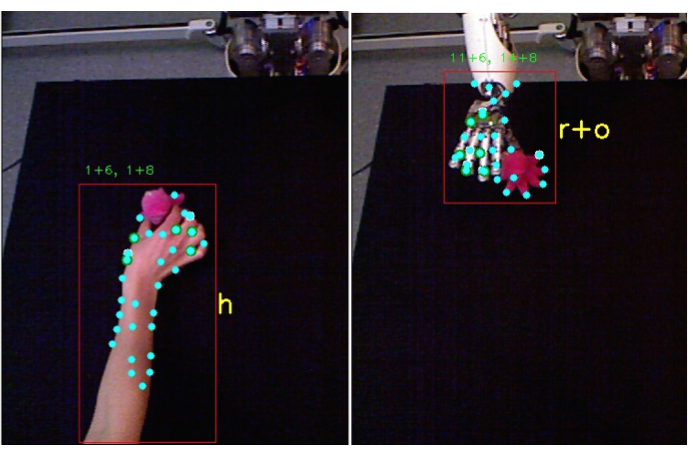

Interaction, for me, also implies multimodal and active exploration and learning. In the MACSI project, we took inspiration from infant development to make the iCub learn incrementally through active exploration and social interaction, exploiting its multiple sensors. Multimodality was fundamental to improve object recognition, distinguish the human and robot bodies from objects, and track the human partner and their gaze during interactions.

Key references: IROS 2012, Humanoids 2012, ROBIO 2013, TAMD 2014


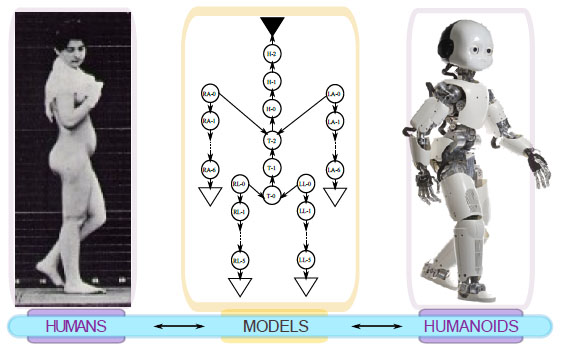

=> From Humans to Humanoids


Is it possible to transfer some principles of learning and control from humans to robots?

I investigated this question during my PhD at IIT, where we used stochastic optimal control to control the robot's movement and compliance in the presence of uncertainties, noise or delays. I continued during my postdoc at ISIR, where we took inspiration from developmental psychology and learning in toddlers to develop a cognitive architecture that lets the iCub learn about objects incrementally and multimodally, through autonomous exploration and interaction with a human tutor.
