My research is at the intersection of robotics, learning, control and interaction. I am increasingly interested in developing collaborative robots that are able to interact physically and socially with humans, especially with people who are not experts in robotics. I am currently interested in two forms of collaboration: human-humanoid collaboration, in the form of teleoperation, and human-cobot or human-exoskeleton physical collaboration. To collaborate, the robot needs a very smart, human-aware whole-body control, where human-aware means that it has to consider the human's status, their intent and their optimization criteria. The robot therefore needs good models of human behavior, which is why I am interested in human-robot interaction.

My goal is to “close the loop” on the human, taking their feedback into account in the robot learning and control process. I am also interested in questions related to the “social” impact of collaborative robotics technologies on humans, for example robot acceptance and trust.

Teleoperation of humanoid robots

This research started during the project AnDy, where we were facing the problem of teaching collaborative whole-body behaviors to the iCub. In short, whole-body teleoperation is the whole-body version of kinesthetic teaching for learning from demonstration. We developed the teleoperation of the iCub robot, showing that it is possible to replicate the human operator’s movements even though the two systems have different dynamics and the operator's movements could even make the robot fall (Humanoids 2018). We also optimized the whole-body movements demonstrated by the human for the robot’s dynamics (Humanoids 2019). Later, we proposed a multimode teleoperation framework that enabled an immersive experience, in which the operator watched the robot’s visual feedback inside a VR headset (RAM 2019). To improve the precision in tracking the human’s desired trajectory, we proposed to optimize the whole-body controller’s parameters so that they are “generic” (RA-L 2020). Finally, we focused on the problem of teleoperating the robot in the presence of large delays (up to 2 seconds), proposing “prescient teleoperation”, a technique that anticipates the human operator using human motion prediction models (arXiv 2021, under review). We have demonstrated our teleoperation suite on two humanoid robots: iCub and Talos.
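
The idea behind prescient teleoperation can be illustrated with a deliberately simple stand-in. The Python sketch below uses a constant-velocity extrapolator in place of the learned human motion prediction models mentioned above. The names `Sample`, `predict_ahead` and `prescient_command` are hypothetical. The robot is sent the posture the operator is expected to have when the delayed command arrives, rather than the posture measured when the command was sent.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Sample:
    t: float          # timestamp of the operator measurement (s)
    q: List[float]    # operator posture, e.g. retargeted joint angles (rad)


def predict_ahead(history: List[Sample], horizon: float) -> List[float]:
    """Extrapolate the operator posture `horizon` seconds into the future.

    Hypothetical stand-in for a learned human motion predictor: it assumes
    constant joint velocity, estimated from the last two samples.
    """
    if len(history) < 2:
        return list(history[-1].q)  # not enough data: hold the last posture
    prev, last = history[-2], history[-1]
    dt = last.t - prev.t
    return [qi + (qi - pi) / dt * horizon for pi, qi in zip(prev.q, last.q)]


def prescient_command(history: List[Sample], delay: float) -> List[float]:
    # Commands reach the robot `delay` seconds late, so send the posture the
    # operator is expected to have when the command is executed.
    return predict_ahead(history, delay)
```

With samples at t = 0 s and t = 0.1 s showing a joint moving at 1 rad/s, a 2 s delay yields a commanded angle of about 2.1 rad. Constant-velocity extrapolation degrades quickly over such long horizons, which is why learned predictors are needed for delays of this size.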

Exoskeletons

This line of research started during the project AnDy. We collaborated with the consortium, and particularly with Ottobock GmbH, to validate their passive exoskeleton Paexo for overhead work (TNSRE 2019). Later, during the COVID-19 pandemic, we used our experience with assistive exoskeletons to help the physicians of the University Hospital of Nancy, who used a passive exoskeleton for back assistance during the prone positioning maneuvers of COVID-19 patients in the ICU (project ExoTurn, AHFE 2020). We are currently studying active upper-body exoskeletons in the project ASMOA.

Machine learning to improve whole-body control

This line of research started during the project CoDyCo, where we explored how to use machine learning techniques to improve the whole-body control of iCub and the control of its contacts. We learned contact models to improve the inverse dynamics (ICRA 2015), then learned how to improve torque control in the presence of contacts (HUMANOIDS 2015). We did not, however, scale these methods to the full humanoid.

At the same time, to enable balancing on our humanoid, we were developing whole-body controllers based on multi-task QP controllers, which have the known problem of requiring tedious expert tuning of task priorities and trajectories. So we started to explore how to automatically learn optimal weights and trajectories for whole-body controllers. In ICRA 2016 we showed that it was possible to learn the evolution of the task weights over time for complex motions of redundant manipulators. In HUMANOIDS 2016 we scaled the problem to the humanoid case, while ensuring that the optimized weights were “safe”, i.e., never violated any of the problem constraints; for this we benchmarked different constrained optimization algorithms. In HUMANOIDS 2017 we applied our learning method to optimize task trajectories rather than task priorities: in many multi-task QP controllers the task priorities are fixed but the trajectories still need to be optimized, and they can be critical in some challenging balancing problems. In the project AnDy, I am pushing this line of research further to realize robust and safe whole-body control, which we need for human-robot collaboration. For example, in HUMANOIDS 2017 we used trial-and-error algorithms to adapt, in a few trials on the real robot, a QP controller that worked well on the simulated robot but not on the real one (the reality gap problem).
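
The Python sketch below makes the role of task weights concrete with a minimal *soft-priority* multi-task controller. It assumes a velocity-level formulation with no inequality constraints. Under that assumption, the QP reduces to minimizing sum_i w_i ||J_i qd - xd_i||^2 + damping ||qd||^2, a weighted least-squares problem with a closed-form solution. Real whole-body QP controllers also include dynamics, contacts and joint limits, and they need a QP solver. The sigmoid weight profile is a hypothetical example of a time-varying weight whose parameters (switch time, steepness) a black-box optimizer could tune. It is not the parameterization used in the papers cited above.

```python
import numpy as np


def weighted_task_solution(jacobians, targets, weights, damping=1e-6):
    """Solve min_qd sum_i w_i ||J_i qd - xd_i||^2 + damping ||qd||^2.

    Setting the gradient to zero gives the normal equations
    (sum_i w_i J_i^T J_i + damping I) qd = sum_i w_i J_i^T xd_i.
    """
    n = jacobians[0].shape[1]
    H = damping * np.eye(n)
    g = np.zeros(n)
    for J, xd, w in zip(jacobians, targets, weights):
        H += w * J.T @ J
        g += w * J.T @ xd
    return np.linalg.solve(H, g)


def sigmoid_weight(t, t_switch, steepness=10.0):
    """Hypothetical time-varying weight rising from 0 to 1 around t_switch."""
    return 1.0 / (1.0 + np.exp(-steepness * (t - t_switch)))
```

Consider two conflicting tasks: a 2D task asking for qd = (1, 0), and a 1D task asking the first joint to stay still. With equal weights, the first joint moves at about 0.5. With the second weight switched off, it moves at about 1. Letting weights such as `sigmoid_weight(t, t_switch)` evolve over time changes which task dominates during the motion.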


Human-robot interaction (social & physical)

When the robot interacts with a human, we must look at the whole set of exchanged signals: physical (i.e., exchanged forces) and social (verbal and non-verbal, e.g., gaze, speech, posture). Is it possible to alter the dynamics of such signals by changing the robot's behavior? Are the production and the dynamics of these signals influenced by individual factors? I am interested in studying these signals during human-robot collaborative tasks, especially when ordinary people without a robotics background interact with the robot. We showed that the robot’s proactivity changes the rhythm of interaction (Frontiers 2014), and that extroversion and negative attitude towards robots appear in the dynamics of gaze and speech during collaborative tasks (IJSR 2016). In the project CoDyCo, I focused on the analysis of physical signals (e.g., contact forces, tactile signals) during a collaborative task requiring physical contact between humans and iCub. In the project AnDy, I am pushing this line of research further to realize efficient multimodal collaboration between humans and humanoids.


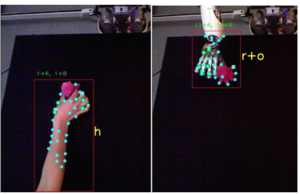

Multimodal learning and deep-learning for robotics

I have been using neural networks since my master's thesis, for optimal coding/decoding using game theory (IJCNN 2009), and then in my PhD thesis for learning sequences of optimal controllers in a model predictive control framework (IROS 2010). During my postdoc at ISIR, we proposed an architecture based on auto-encoders for multimodal learning of robot skills (ICDL 2014). We applied the architecture to the iCub to solve the problem of learning to draw numbers, combining visual, proprioceptive and auditory information (RAS 2014). We showed that a multimodal framework can not only improve classification but also compensate for missing modalities during recognition. This line of research is now pursued in the AnDy project, for classifying and recognizing human actions from several types of sensors.
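
A model can compensate for a missing modality because it learns a shared representation of all modalities at once. The Python sketch below illustrates this with a *linear* autoencoder, i.e., principal component analysis via SVD, instead of the deep auto-encoders of ICDL 2014 and RAS 2014. It uses hypothetical data where each row concatenates the features of two modalities. The latent code of a sample with a missing modality is inferred by least squares from the observed dimensions alone, then decoded into all dimensions. The function names are illustrative.

```python
import numpy as np


def fit_shared_subspace(X, k):
    """Linear 'autoencoder': top-k principal directions of the joint data.

    X has one row per sample, with the features of all modalities concatenated.
    Returns the mean and a decoder matrix W such that x ~ mean + W z.
    """
    mean = X.mean(axis=0)
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:k].T  # W has shape (n_features, k)


def reconstruct_missing(x_obs, obs_idx, mean, W):
    """Infer the latent code from the observed dimensions, then decode all."""
    W_obs = W[obs_idx]
    z, *_ = np.linalg.lstsq(W_obs, x_obs - mean[obs_idx], rcond=None)
    return mean + W @ z
```

On noise-free synthetic data generated from two latent factors, the second modality is recovered to numerical precision from the first. With real sensor data, the reconstruction is only as good as the learned shared representation, which is where nonlinear auto-encoders help.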


Past topics:

Multimodal and active learning

In the MACSI project we took inspiration from infant development to make the iCub learn incrementally through active exploration and social interaction with a tutor, exploiting its multitude of sensors (TAMD 2014). Multimodality was fundamental in many ways. We combined visual and proprioceptive information to improve object recognition (ROBIO 2013) and to discriminate the human and robot bodies from objects (AURO 2016). We combined audio and visual information to track the human partner and their gaze during interactions (BICA 2012, Humanoids 2012).

Instructions for reproducing the experiments: online documentation, code (svn repository)
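
The Python sketch below is a minimal illustration of combining audio and visual information. It fuses two estimates of the partner's bearing by inverse-variance (precision) weighting. This is a generic fusion rule, not necessarily the one used in BICA 2012 or Humanoids 2012. When one modality is missing, e.g. when the face leaves the camera view, the sketch falls back on the other. The bearings and variances are hypothetical, and angles are assumed to stay far from the ±π wrap-around.

```python
from typing import Optional, Tuple

Estimate = Tuple[float, float]  # (bearing in rad, variance in rad^2)


def fuse_bearings(audio: Optional[Estimate],
                  visual: Optional[Estimate]) -> Optional[Estimate]:
    """Inverse-variance (precision-weighted) fusion of two bearing estimates.

    Either modality may be missing, in which case the other is used alone.
    Assumes bearings are far from the +/-pi wrap-around.
    """
    available = [e for e in (audio, visual) if e is not None]
    if not available:
        return None
    precision = sum(1.0 / var for _, var in available)
    mean = sum(bearing / var for bearing, var in available) / precision
    return mean, 1.0 / precision
```

With a noisy audio estimate (0.2 rad, variance 0.04) and a sharper visual one (0.1 rad, variance 0.01), the fused bearing is 0.12 rad with variance 0.008. The result lies closer to the more reliable modality and is more certain than either estimate alone.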

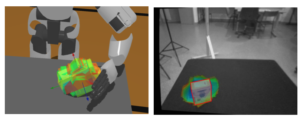

Dealing with uncertainty in object pose estimation

The real world is uncertain and full of noise: as humans do, the robot should take this uncertainty into account when computing its actions. We proposed a new grasp planning method that explicitly takes into account the uncertainty in the object pose, which the robot estimates from a sparse point cloud acquired by its noisy cameras.

References: RAS 2014
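
A toy example shows the benefit of reasoning about pose uncertainty. The Python sketch below scores each candidate grasp by its *expected* success under a Gaussian error on the estimated object position, computed by Monte Carlo sampling. This is a generic technique, not the grasp planner of RAS 2014. The gripper tolerance model, the two candidate approach directions and all numeric values are hypothetical. When the error is much larger along one axis, as with noisy depth estimates, both candidates look equally good at the most likely pose. Only the expected-success criterion prefers the one that is robust to the dominant error.

```python
import random


def grasp_succeeds(dx, dy, approach, lateral_tol=0.01, depth_tol=0.04):
    """Toy success test for a gripper aimed at the estimated object center.

    (dx, dy) is the error between the true and estimated center (m). This
    hypothetical gripper tolerates up to depth_tol of error along its
    approach axis but only lateral_tol across it.
    """
    along, lateral = (dx, dy) if approach == "x" else (dy, dx)
    return abs(along) < depth_tol and abs(lateral) < lateral_tol


def expected_success(approach, sigma_x, sigma_y, n=5000, seed=0):
    """Monte Carlo estimate of P(success) under a zero-mean Gaussian error."""
    rng = random.Random(seed)
    hits = sum(
        grasp_succeeds(rng.gauss(0.0, sigma_x), rng.gauss(0.0, sigma_y), approach)
        for _ in range(n)
    )
    return hits / n


def plan_grasp(candidates, sigma_x, sigma_y):
    # Pick the candidate with the highest expected success, not the one
    # that merely looks best at the most likely pose.
    return max(candidates, key=lambda a: expected_success(a, sigma_x, sigma_y))
```

With a 2 cm standard deviation along x and 3 mm along y, approaching along x succeeds in roughly 95% of the samples, and approaching along y in fewer than half. Both approaches succeed at the mean pose.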

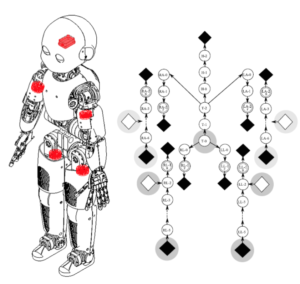

Whole-body dynamics estimation and control of physical interaction

To interact physically, the robot needs a reliable estimation of its whole-body dynamics and its contact forces. For robots such as iCub, which are not equipped with joint torque sensors, the main problems are: 1) how to retrieve the interaction forces without dedicated sensors, in the presence of multiple contacts at arbitrary locations; 2) how to estimate the joint torques in such conditions. During my PhD at IIT, we developed the theory of Enhanced Oriented Graphs (EOG), which makes it possible to compute the whole-body robot dynamics online by combining the measurements of force/torque, inertial and tactile sensors. This estimation method has been implemented in the library iDyn, included in the iCub software (now significantly improved in iDynTree thanks to the CoDyCo project).

References: survey paper on robotic simulators, Humanoids 2011, ICRA 2015, Autonomous Robots 2012. Software for library and experiments: code (svn)
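
A toy case illustrates how contact forces and joint torques can be recovered without dedicated sensors. The Python sketch below is neither the EOG formulation nor the iDyn/iDynTree API. It rests on several assumptions:

- a single rigid subchain beyond a force/torque sensor, moving in pure translation (no angular velocity or acceleration);
- a single pure-force contact whose location is given by the tactile skin;
- known masses and centers of mass;
- a linear acceleration measured by an inertial sensor.

The contact force then follows from Newton's equation of the subchain. The torque of a joint inside the subchain follows from the moment balance of the part distal to that joint. Function names are illustrative.

```python
import numpy as np

GRAVITY = np.array([0.0, 0.0, -9.81])  # world frame, m/s^2


def estimate_contact_force(f_sensor, mass, acc, gravity=GRAVITY):
    """Newton equation of the rigid subchain beyond the F/T sensor.

    f_sensor: force the sensor side exerts on the subchain (N)
    mass:     total mass of the subchain (kg)
    acc:      linear acceleration of the subchain, e.g. from an IMU (m/s^2)
    From f_sensor + mass * g + f_contact = mass * acc.
    """
    return mass * (acc - gravity) - f_sensor


def estimate_joint_torque(axis, p_joint, m_distal, com_distal, acc,
                          p_contact, f_contact, gravity=GRAVITY):
    """Torque the joint exerts on the part of the subchain distal to it.

    Moment balance about the joint origin for the distal part in pure
    translation: mu + r_com x m g + r_contact x f = r_com x m acc.
    """
    inertial = np.cross(com_distal - p_joint, m_distal * (acc - gravity))
    contact = np.cross(p_contact - p_joint, f_contact)
    moment = inertial - contact
    return float(axis @ moment)
```

Take a 1.5 kg subchain at rest whose sensor measures 9.81 N of upward force. It must be receiving 4.905 N of upward contact force. Applied 0.2 m from a joint, this contact exactly balances the gravity moment of a 1 kg distal part centered 0.1 m from the joint, so the estimated joint torque is zero. Without the contact, the same joint would need about -0.981 N·m.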

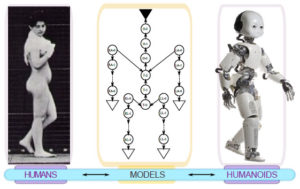

From Humans to Humanoids

Is it possible to transfer some principles of learning and control from humans to robots? Yes, and it is generally a good idea (see the survey paper Humans to Humanoids). I investigated this question during my PhD at IIT, where I showed that optimal control can be used to transfer to the robot some optimization criteria typical of human movement planning (IROS 2010). We also used stochastic optimal control to control the robot's movement and its compliance in the presence of uncertainties, noise or delays (IROS 2011). Then, during my postdoc at ISIR, we took inspiration from developmental psychology and learning in toddlers to develop a cognitive architecture that lets the iCub learn objects incrementally and in a multimodal way, through autonomous exploration and interaction with a human tutor (TAMD 2014).
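
The Python sketch below shows what it means to generate a movement by minimizing a cost instead of tracking a predefined trajectory. It solves a finite-horizon, discrete-time linear-quadratic regulator (LQR) by backward Riccati recursion. The system is a hypothetical 1-DOF point mass that must reach a target while minimizing a quadratic effort term. This textbook formulation was chosen for brevity: it does not reproduce the criteria or methods of IROS 2010 and IROS 2011, including their stochastic formulation. The cost weights are illustrative assumptions.

```python
import numpy as np


def lqr_gains(A, B, Q, R, Qf, horizon):
    """Backward Riccati recursion for the finite-horizon discrete LQR.

    Minimizes sum_t (x_t' Q x_t + u_t' R u_t) + x_T' Qf x_T subject to
    x_{t+1} = A x_t + B u_t, and returns gains K_t such that u_t = -K_t x_t.
    """
    P = Qf
    gains = []
    for _ in range(horizon):
        K = np.linalg.solve(R + B.T @ P @ B, B.T @ P @ A)
        P = Q + A.T @ P @ (A - B @ K)
        gains.append(K)
    return gains[::-1]  # gains[t] is applied at time step t


def simulate(A, B, gains, x0):
    """Roll out the closed-loop system and return the visited states."""
    x = x0
    traj = [x]
    for K in gains:
        x = A @ x + B @ (-K @ x)
        traj.append(x)
    return traj
```

The state is [position error, velocity] with dt = 0.01 s, so A = [[1, dt], [0, 1]] and B = [[0], [dt]]. A large terminal weight and a small effort weight make the mass reach the target and nearly stop there after 100 steps. Changing these weights changes the shape of the movement, which is the sense in which a cost function encodes a movement criterion.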